Dr. Yong-Ju Lee is the head of the Visual Intelligence Research Department at the Electronics and Telecommunications Research Institute (ETRI) in South Korea and an adjunct professor at the Korea National University of Science and Technology. He received a Ph.D. in computer engineering from Chungnam National University (CNU), South Korea. He was a visiting scholar at Virginia Tech and has served as a planning committee/RFP member (’19~’23: AI; ’24: AI Safety R&D, AGI) for the Ministry of Science and ICT in Korea. With a distinguished career in visual intelligence research, Dr. Lee has made significant contributions to the field and continues to lead innovative projects that advance multimedia technologies, such as generative visual intelligence (KOALA, Ko-LLaVA) and visual AI agent collaboration (CLARA). His research interests include artificial general intelligence (AGI), generative AI, edge-based model compression, human-level visual perception, and crossmodal/multimodal learning.

🎉 Visual Intelligence Lab [Homepage]

- (2025) : We have 11 papers this year (3 SCI-E, 3 ICCV, 1 ACM MM, 4 AVSS).

– (2025/10): Three papers accepted to ICCV Workshop 2025 (Jo Youngjoo, Ilchae Jung, Soojin Jang)

– (2025/10): One paper accepted to Computers and Electrical Engineering (Ham Jaeseok)

– (2025/10): One paper accepted to ACM MM (Park Minho)

– (2025/09): One paper accepted to MDPI AI (Sanghun Jeon)

– (2025/07): Four papers accepted to AVSS 2025 (Kim Daehoe, Oh Seongchan, Yongjin Kwon, Kimin Yun)

– (2025/05): One paper accepted to Knowledge-Based Systems (Ham Jaeseok)

- (2024) : We have 19 papers this year (2 ETRI J., 5 CVPR, 2 AVSS, 1 ICML, 6 ECCV, 3 NeurIPS).

– (2024/12): Three papers accepted to NeurIPS 2024 (Kwanyong Park, Youngwan Lee, Ham Jaeseok)

– (2024/09): Six papers accepted to ECCV 2024 (Youngwan Lee, Kwanyong Park, Lee Seongwon, Min Jinyoung, Ham Jaeseok, Kim Hyungil)

– (2024/08): One paper accepted to ICML 2024 (Youngwan Lee)

– (2024/07): Two papers accepted to AVSS 2024 (Kim Daehoe, Oh Seongchan)

– (2024/06): Five papers accepted to CVPR 2024 (Kwanyong Park, Youngwan Lee, Bae Kangmin, Jo Youngjoo)

– (2024/02): Two papers accepted to ETRI J. (Jeong Junyoung, Jeon Sanghun)

- (2023) : We have 9 papers this year (1 AAAI, 1 ICLR, 4 CVPR, 1 ICCV, 1 NeurIPS, 1 ETRI J.).

– (2023/12): One paper accepted to NeurIPS 2023 (Youngwan Lee)

– (2023/10): One paper accepted to ICCV 2023 (Park Minho); one paper accepted to ETRI J. (Yun Kimin)

– (2023/06): Four papers accepted to CVPR 2023 (Kim Jonghee, Ham Jaeseok, Seol Mooah, Bae Kangmin)

– (2023/05): One paper accepted to ICLR 2023 (Youngwan Lee)

– (2023/02): One paper accepted to AAAI 2023 (Jongryul Lee)

- (2022) : We have 6 papers this year (1 ETRI J., 2 CVPR, 2 ECCV, 1 BMVC).

– (2022/11): One paper accepted to BMVC 2022 (Jongryul Lee)

– (2022/10): Two papers accepted to ECCV 2022 (Ham Jaeseok, Youngwan Lee)

– (2022/06): Two papers accepted to CVPR 2022 (Youngwan Lee, Moon Jinyoung)

– (2022/04): One paper accepted to ETRI J. (Chunhee Lee)

🎖 Projects

-

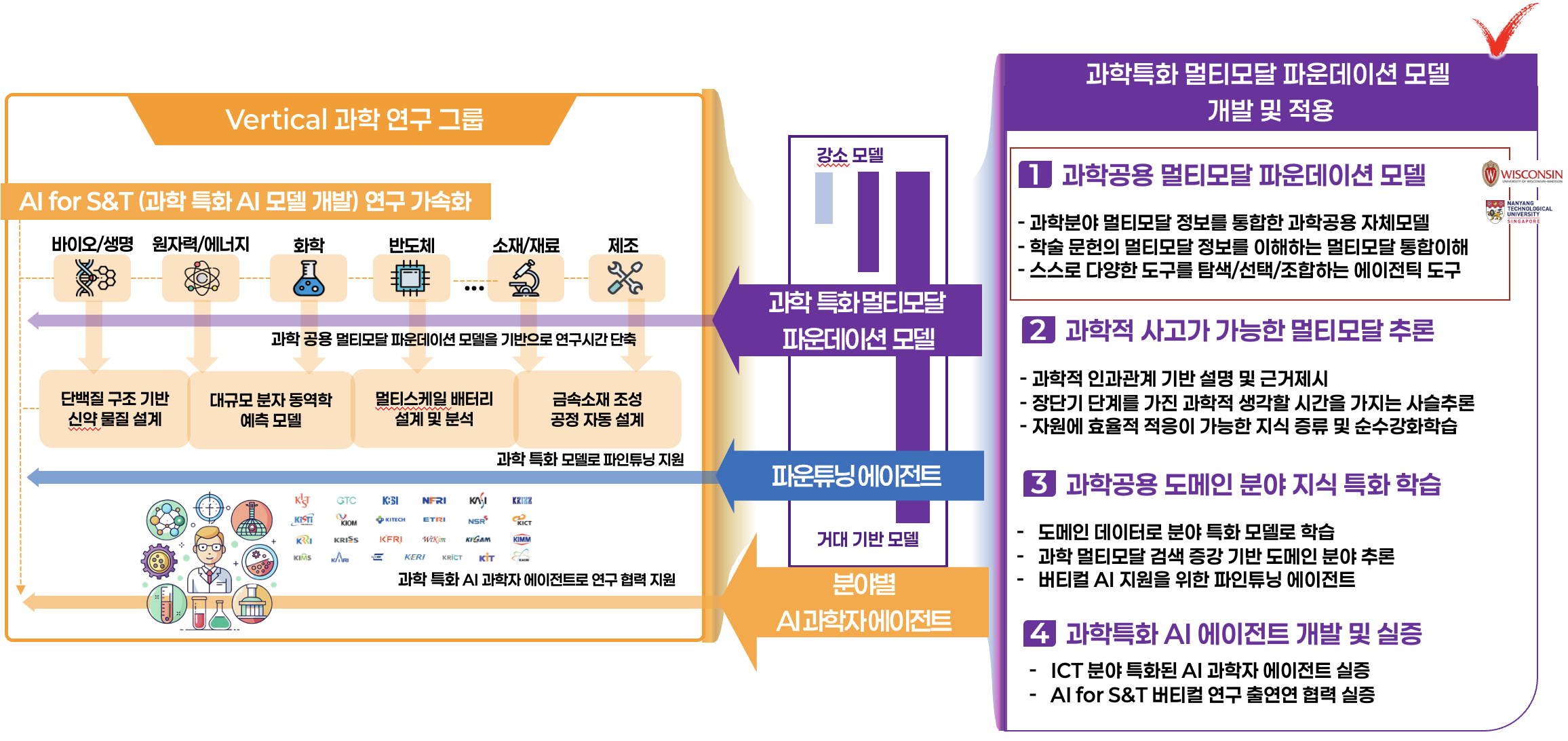

Development and Application of a Science-Specialized Multimodal Foundation Model

Yong-Ju Lee (Co-Investigator, ‘26~’30)

– Within this science-specialized multimodal foundation model project, we are responsible for the model's core capabilities: multimodal understanding and generation, as well as agent-based applications.

– In particular, we are conducting international collaborative research with Professor Yongjae Lee at the University of Wisconsin–Madison and Professor Jaehong Yoon at Nanyang Technological University, Singapore.

-

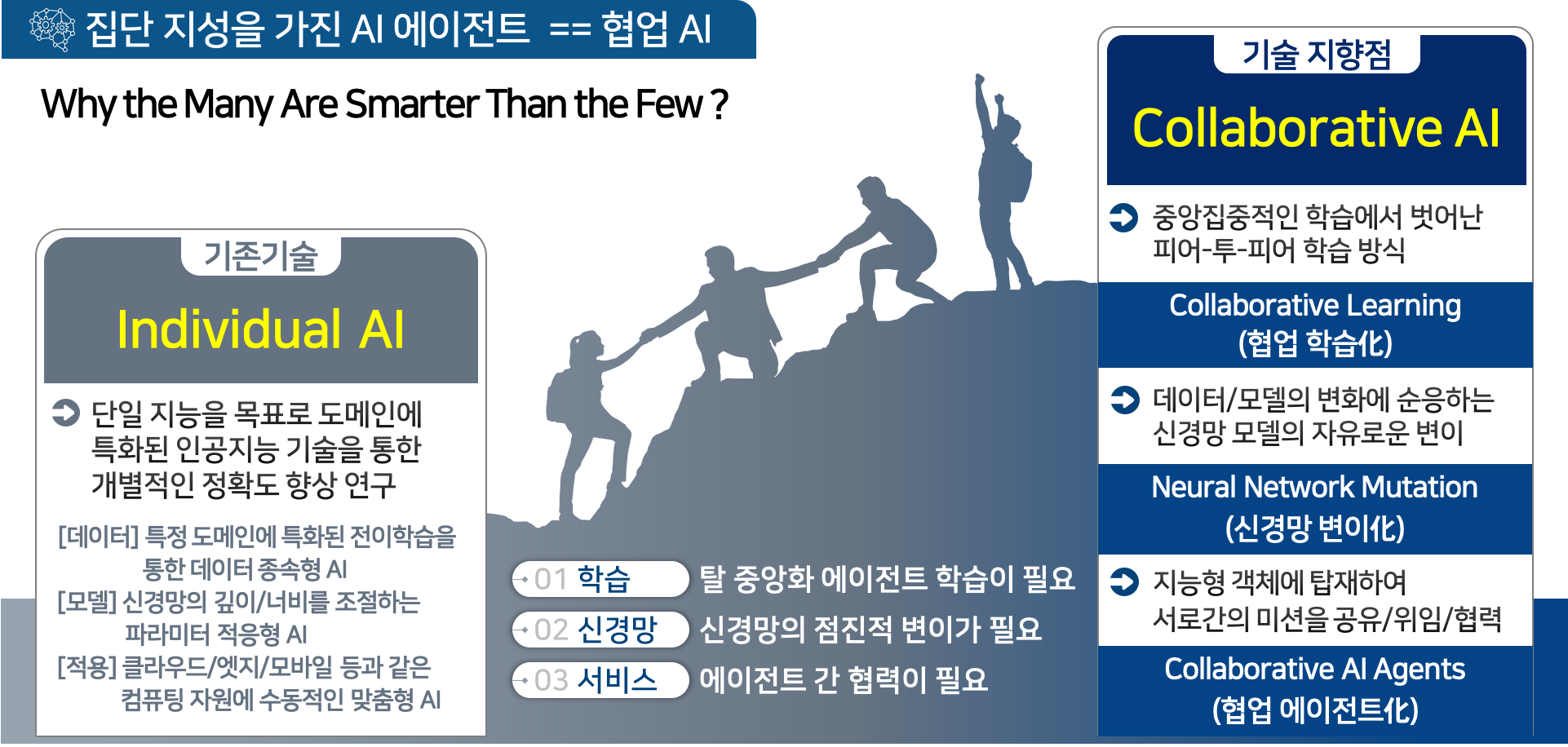

Development of AI Autonomy and Knowledge Enhancement for AI Agent Collaboration

Yong-Ju Lee (Project Investigator, ‘22~’26) [Homepage]

– This research explores the underlying technologies that allow agents to see, hear, speak, and interact with one another. In particular, we are exploring various services through cooperative learning from a robot's perspective.

-

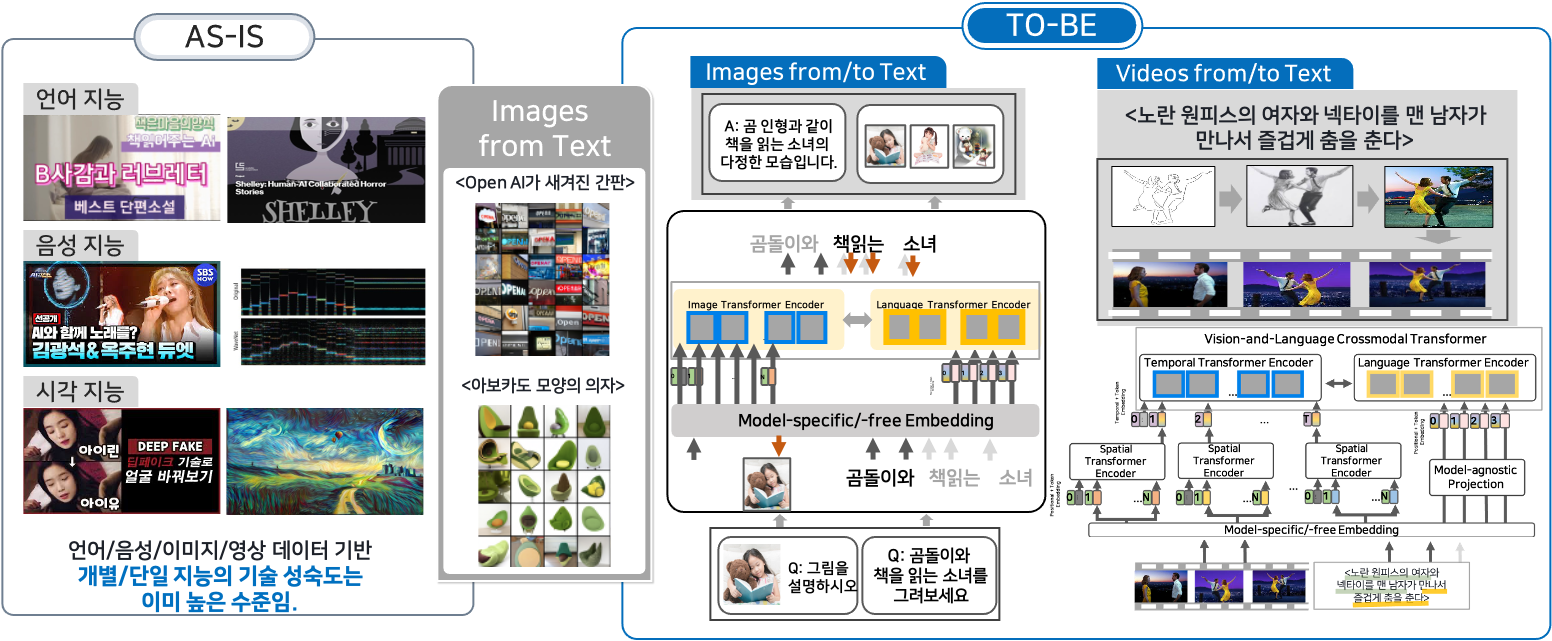

Development of Large Korean Language Model Technology for Efficient Pre-training

Yong-Ju Lee (Project Investigator, ‘22~’25) [Homepage]

– The main purpose of this study is to develop an efficient pre-training model for the Korean language. In particular, we are pursuing several lines of research on building and understanding visual language models. The models we have developed include KOALA, a text-to-image model, and a LLaVA (Large Language and Vision Assistant) model.

-

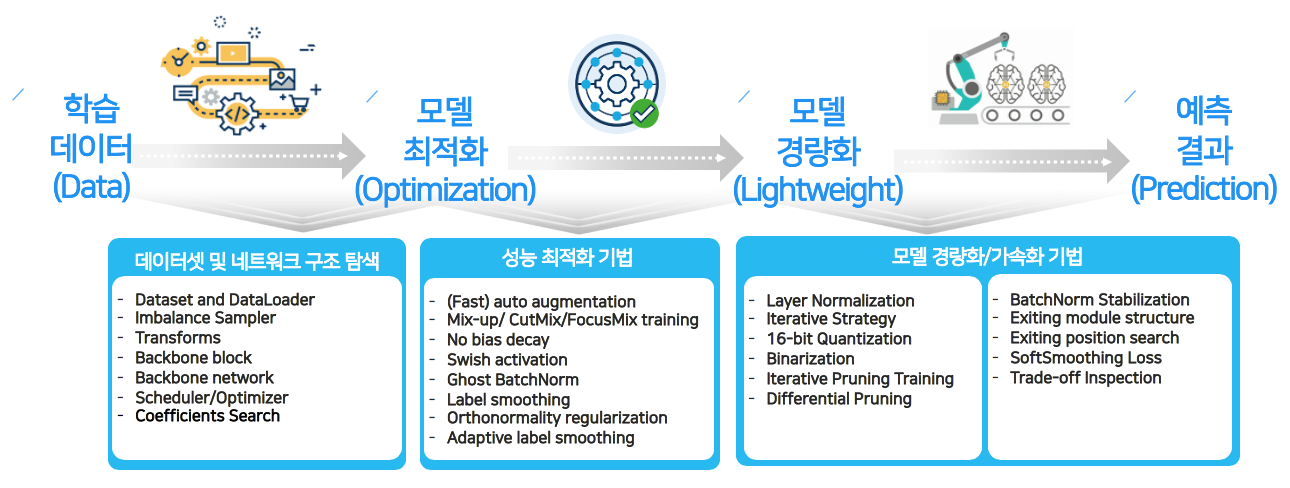

Development of Model Optimization and Lightweight based on Edge AI Technology

Yong-Ju Lee (Project Investigator, ‘20) [Homepage]

– The main purpose of this research is to make AI models lightweight. In particular, we addressed this challenge through studies on techniques such as model compression and model pruning.

-

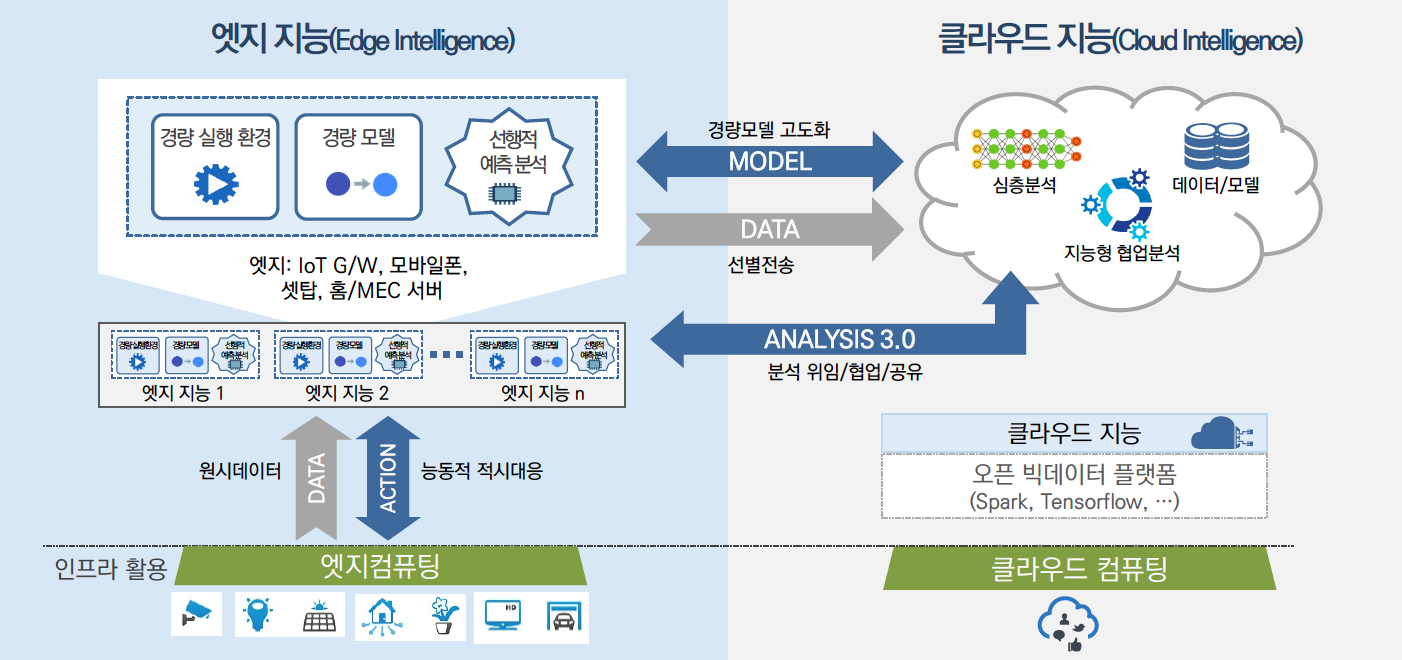

Development of Big Data Edge Analytics SW Technology for Load Balancing and Active Timely Response

Yong-Ju Lee (Project Investigator, ‘18~’20) [Homepage]

– In this research, we studied various underlying deep learning models in a big data analytics environment. In particular, we developed an edge cloud framework.

📝 Publications

-

HoliSafe: Holistic Safety Benchmarking and Modeling with Safety Meta Token for Vision-Language Model

Youngwan Lee, Kangsan Kim, Kwanyong Park, Ilchae Jung, Sujin Jang, Seanie Lee, Yong-Ju Lee, Sung Ju Hwang

arXiv

[paper]

-

Exploring Pretrained Diffusion Representation for Visual-Language Models

Ilchae Jung, Soojin Jang, Youngwan Lee, Yong-Ju Lee

ICCV 2025 Workshop on “Knowledge-Intensive Multimodal Reasoning”

[paper]

-

Enhancing Region-Level Reasoning in Vision-Language Models with Complementary Visual Focus

Soojin Jang, Kwanyong Park, Ilchae Jung, Yong-Ju Lee

ICCV 2025 Workshop on “Knowledge-Intensive Multimodal Reasoning”

[paper]

-

Dual-Stream Former: A Dual-Branch Transformer Architecture for Visual Speech Recognition

Sanghun Jeon, Jieun Lee, Yong-Ju Lee

MDPI AI Journal

[paper]

-

KOALA: Empirical Lessons Toward Memory-Efficient and Fast Diffusion Models for Text-to-Image Synthesis

Youngwan Lee, Kwanyong Park, Yoorhim Cho, Yong-Ju Lee, Sung Ju Hwang

Conference on Neural Information Processing Systems (NeurIPS) 2024

[paper] [demo] [models]

-

A Multimodal Chain of Tools for Described Object Detection

Kwanyong Park, Youngwan Lee, Yong-Ju Lee

Conference on Neural Information Processing Systems (NeurIPS) Workshop 2024

[paper]

-

Language-only Efficient Prompt Learning for Zero-shot Composed Image Retrieval

Seongwon Lee, Yong-Ju Lee

European Conference on Computer Vision (ECCV) Workshop 2024

[paper]

-

DiT-Pruner: Pruning Diffusion Transformer Models for Text-to-Image Synthesis Using Human Preference Scores

Youngwan Lee, Yong-Ju Lee, Sung Ju Hwang

European Conference on Computer Vision (ECCV) Workshop 2024

[paper]

-

KOALA: Fast and Memory-Efficient Latent Diffusion Models via Self-Attention Distillation

Youngwan Lee, Kwanyong Park, Yoorhim Cho, Yong-Ju Lee, Sung Ju Hwang

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshop 2024

[Project page] [paper] [code]

-

Multimodal AudioVisual Speech Recognition Architecture Using a Three-Feature Multi Fusion Method for Noise-Robust Systems

Sanghun Jeon, Jieun Lee, Dohyeon Yeo, Yong-Ju Lee, SeungJun Kim

ETRI Journal 2024

[paper]

-

Block-wise Word Embedding Compression Revisited: Better Weighting and Structuring

Jong-Ryul Lee, Yong-Ju Lee, Yong-Hyuk Moon

Empirical Methods in Natural Language Processing (EMNLP) 2021

[paper]

-

Efficient Approximation of Filters for High-Accuracy Binary Convolutional Neural Networks

Junyong Park, Yong-Hyuk Moon, Yong-Ju Lee

European Conference on Computer Vision (ECCV) Workshop 2020

[paper]

-

Long Short-Term Memory Recurrent Neural Network for Urban Traffic Prediction: A Case Study of Seoul

Yong-Ju Lee, OkGee Min

IEEE International Conference on Intelligent Transportation Systems (ITSC) 2018

[paper]

-

Adaptive delivery and handoff management framework for multimedia session mobility

Yong-Ju Lee

Information Sciences 2014

[paper]

-

Video block device for user-friendly delivery in IaaS clouds

Yong-Ju Lee

The Journal of Supercomputing 2014

[paper]

-

BitNBD: BitTorrent-based network block device for provisioning virtual machines in IaaS clouds

Yong-Ju Lee, Hag-Young Kim, Cheol-Hoon Lee

IEICE Transactions on Information and Systems 2011

[paper]

-

Cell approximation method in quorum systems for minimizing access time

Yong-Ju Lee, Hag-Young Kim, Cheol-Hoon Lee

Cluster Computing 2009

[paper]

💬 Invited Talks and Promotions

- 2025.11, Promotional Video for Safe LLaVA (AI Safety Model)

- 2025.07, Occupational Safety and Health Month (AI adoption cases in the public sector and AI safety research)

- 2025.06, TJB "Challengers of Daedeok" (Electronics and Telecommunications Research Institute)

- 2025.04, Awarded the Prime Minister’s Commendation in celebration of the 2025 Science, Technology, Information and Communication Day

- 2025.01, Promotional Video for Collaborative Intelligence of Agents

- 2024.11, ICT R&D Week 2024

- 2024.09, Agent Collaboration Intelligence (CLARA-ROBOT) in ETRI

- 2024.06, Generative Visual Intelligence in ETRI AI Conference 2024

- 2024.04, ETRI WebZine 2024/04

- 2024.01, ETRI Generative AI promotion 2024/01

- 2023, Agent Collaboration Project Overview